Many industries grapple with unknowingly using AI-generated content.

In higher education, for example, educators want students to write original content so they can evaluate students’ unassisted skills. So their use of AI content detection tools makes sense.

But what about marketing? Does it matter whether a writer uses generative AI, such as ChatGPT, Google’s Bard, Microsoft’s Bing, or others? What if a freelancer turns in a piece written by a generative AI tool? Should they be paid the same as if they wrote it from scratch? What if they use AI to assist and then rework the content?

The disadvantages of AI-generated copy have been written about and discussed at length. Among the highlights, AI writing tools:

- Rely on existing information – content already created. They don’t develop something creative and new, providing less value for readers.

- Can generate fake information. You can’t publish the content without conducting thorough fact-checking.

- Create duplicate content and copyright issues if the AI system receives too many similar requests. Identical content harms search engine optimization (SEO), e-commerce conversion rate optimization (CRO), and the publisher’s reputation.

Google caused some confusion when it called AI-generated materials spam. But its search advocate John Mueller clarified that machine-created content would trigger a penalty if it is poorly written, keyword-stuffed, and low quality – the same penalty applied to human-created content with those attributes.

Recently, a freelance writer started a discussion on Twitter to shed light on how these AI tools affect client relationships. It highlighted cases in which clients withheld payment after accusing freelancers of using AI writing tools (even though they hadn’t).

> It’s happening…
>
> Clients are accusing writers of using AI writing tools when they never have. They plug your content into ONE highly inaccurate AI detector and that’s the be all end all to this discussion. No payment and they say no more to your writing.
>
> Cringe…clients…
>
> – Elna Cain | elnacain.com (@ecainwrites) May 4, 2023

But how did the clients conclude the work came from AI? Most likely, they used AI detection tools. These may seem like handy checkers, but are they the best approach? Yes, they could help prevent misinformation and plagiarism. But, as those freelancers found, they may also prompt unfounded accusations of plagiarism.

Take both perspectives into account if you use AI detectors and ensure you understand the limitations.

Testing AI content detection tools

Tools designed to distinguish between human- and AI-generated content may perform a linguistic analysis to see if the content has issues with semantic meaning or repetitions (an indicator of AI’s involvement). They also may conduct comparison analyses, in which the system evaluates whether the content resembles known AI-generated text.
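
None of the tools below publishes its exact method. The sketch that follows is only a rough illustration of the two kinds of checks. It rests on two assumptions. Repetition is measured as the share of repeated word trigrams. "Resemblance" is measured as Jaccard overlap with a hypothetical collection of known AI-generated samples. Real detectors rely on trained language models rather than simple word counts.

```python
from collections import Counter


def ngrams(text: str, n: int = 3) -> list[tuple[str, ...]]:
    """Split text into lowercase word n-grams."""
    words = text.lower().split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def repetition_ratio(text: str, n: int = 3) -> float:
    """Share of n-grams that occur more than once (a crude repetition signal)."""
    grams = ngrams(text, n)
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(grams)


def similarity(text: str, reference: str, n: int = 3) -> float:
    """Jaccard overlap between the n-gram sets of two texts (0.0 to 1.0)."""
    a, b = set(ngrams(text, n)), set(ngrams(reference, n))
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def max_similarity(text: str, known_ai_samples: list[str], n: int = 3) -> float:
    """Highest overlap with any sample in a (hypothetical) set of known AI texts."""
    return max((similarity(text, ref, n) for ref in known_ai_samples), default=0.0)
```

A high repetition ratio or a high overlap with known AI samples would only be a hint. As the tests below show, such signals can easily mislabel text.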

For this article, I tested four popular AI checkers by submitting two pieces of content – one AI-generated and one human-created. Here’s what I found:

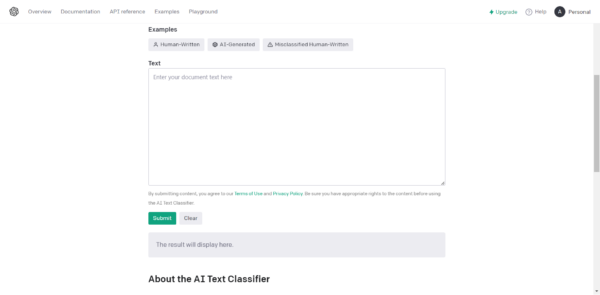

1. AI Classifier

OpenAI, the developer of ChatGPT, also created AI Classifier to distinguish between AI-generated and human-written text. Users paste the text into the box and click submit. However, it requires at least 1,000 characters to complete the assessment and works only for English text.

OpenAI says its tests show the classifier correctly labels AI-written text as “likely AI-written” (a true positive) only 26% of the time, which makes it unreliable. It also says the system incorrectly identifies human-written content as AI-written in 9% of cases.
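
Applying those two rates to a batch of content shows what they mean in practice. The sketch below assumes a hypothetical mix of 100 AI-written and 100 human-written articles. Only the 26% and 9% rates come from OpenAI.

```python
def expected_flags(n_ai: int, n_human: int,
                   tpr: float = 0.26, fpr: float = 0.09) -> dict:
    """Expected outcomes for a batch, given a true positive and false positive rate."""
    true_pos = n_ai * tpr          # AI-written texts correctly flagged
    missed_ai = n_ai - true_pos    # AI-written texts that slip through
    false_pos = n_human * fpr      # human-written texts wrongly flagged
    flagged = true_pos + false_pos
    # Share of flagged texts that really are AI-written
    precision = true_pos / flagged if flagged else 0.0
    return {"true_pos": true_pos, "missed_ai": missed_ai,
            "false_pos": false_pos, "precision": precision}


# Hypothetical batch: 100 AI-written and 100 human-written articles
result = expected_flags(100, 100)
```

Under that assumption, the classifier flags about 26 of the AI pieces and misses about 74. It also wrongly flags about 9 human-written pieces. So roughly one flag in four (9 of 35) would land on a writer who did nothing wrong.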

Because OpenAI collects feedback from users, the AI Classifier may improve. Now, let’s see what happened with my test.

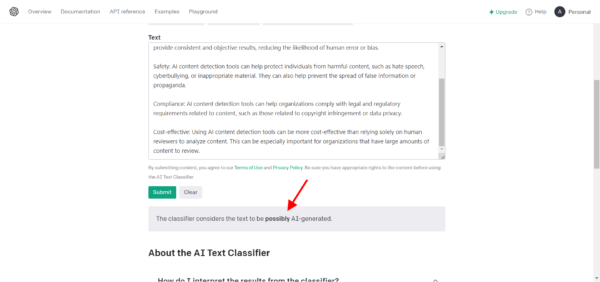

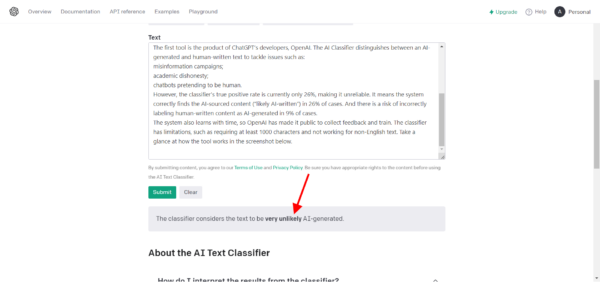

AI-generated text conclusion: Accurate. “The classifier considers the text to be possibly AI-generated.”

Human-generated text conclusion: Accurate. “The classifier considers the text to be very unlikely AI-generated.”

Price: Free

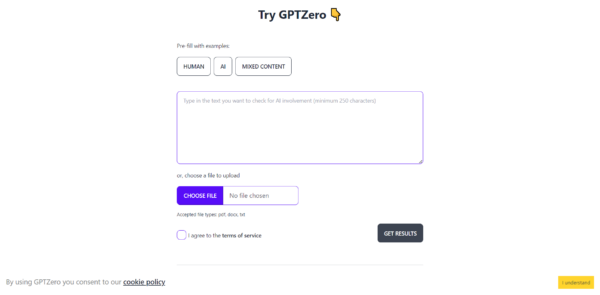

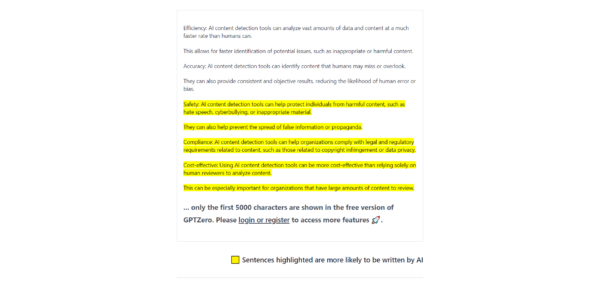

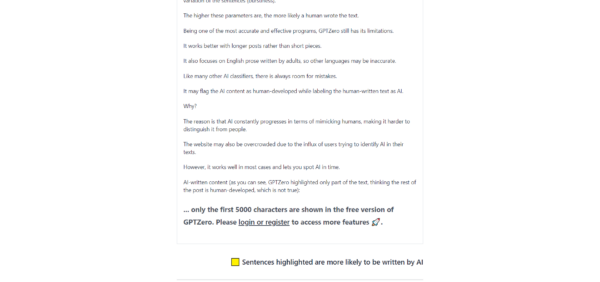

2. GPTZero

GPTZero calls itself the world’s No. 1 AI detector with over 1 million users. It measures AI involvement based on text complexity (perplexity) and sentence variation (burstiness). The more complex and varied, the more likely a human wrote the text.
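
GPTZero does not publish its exact formulas, so the following is only a sketch of the two ideas under stated assumptions. Perplexity is taken as the standard measure: the exponential of the average negative log-probability that a language model assigns to each token. Burstiness is defined here, hypothetically, as the spread (population standard deviation) of per-sentence perplexities. The token probabilities are hypothetical inputs. A real detector would obtain them from a language model, which is not shown.

```python
import math
from statistics import pstdev


def perplexity(token_probs: list[float]) -> float:
    """Exponential of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


def burstiness(sentence_token_probs: list[list[float]]) -> float:
    """Spread of per-sentence perplexities (hypothetical definition)."""
    if not sentence_token_probs:
        raise ValueError("need at least one sentence")
    return pstdev([perplexity(s) for s in sentence_token_probs])


# Hypothetical probabilities for two sentences: one predictable, one less so
sentences = [[0.5, 0.5], [0.25, 0.25]]
scores = [perplexity(s) for s in sentences]
spread = burstiness(sentences)
```

Here the two sentences score perplexities of about 2 and 4, and the spread between them is about 1. Text that mixes predictable and surprising sentences produces a larger spread. That is the kind of variation GPTZero associates with human writing.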

GPTZero still has its limitations. It works better with longer posts than with short pieces. It also focuses on English written by adults, so its conclusions for other languages may be less accurate.

Users paste their text into the box or upload a file, then click the Get Results button.

AI-generated text conclusion: Not accurate. It highlighted the text it considered AI-generated but mistakenly concluded that a human wrote the first four paragraphs.

Human-generated text conclusion: Accurate. It didn’t indicate any sentence was more likely to be written by AI.

Price: Free

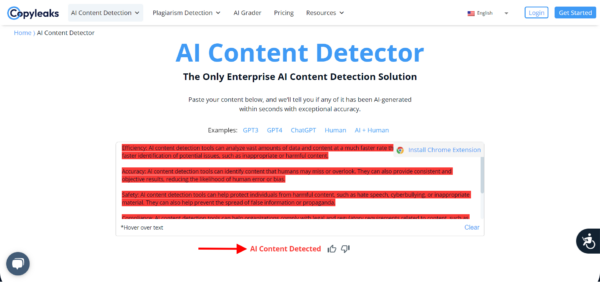

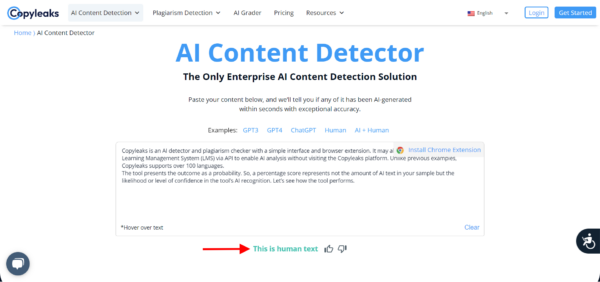

3. Copyleaks

Copyleaks detects AI-generated and plagiarized content. It can be used on its site, as a browser extension, or integrated into your website or learning management system.

It supports more than 100 languages. Copyleaks returns a percentage that expresses how confident it is in its verdict.

An AI-sourced text (the tool completed the task successfully):

AI-generated text conclusion: Accurate. It highlighted all the text in red to indicate detected AI content (96.5% probability of AI).

Human-generated text conclusion: Accurate. It stated, “This is human text” (98.2% probability of human).

Price: Free

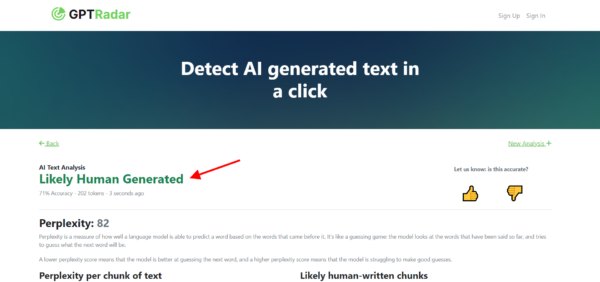

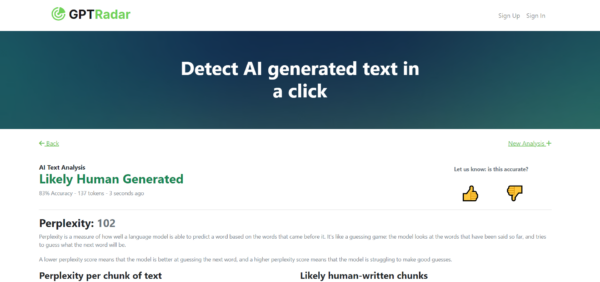

4. GPTRadar

GPTRadar has a straightforward, easy-to-use interface. Its assessment includes a conclusion and a text-perplexity score that indicates how well it could predict the words.

Perplexity ranges from one to infinity. The lower the perplexity score, the more likely the text is AI-sourced. The system also separates the text into parts and marks each one as human- or AI-generated.
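
GPTRadar does not document its exact computation. The formula below assumes it uses the standard definition of perplexity. In that definition, a language model $p$ scores a sequence of $N$ words $w_1, \dots, w_N$ as follows:

$$
\mathrm{PP} = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log p(w_i \mid w_1, \dots, w_{i-1})\right) = \left(\prod_{i=1}^{N} \frac{1}{p(w_i \mid w_1, \dots, w_{i-1})}\right)^{1/N}
$$

Each probability lies between 0 and 1, so every term $-\log p$ is zero or positive. That means $\mathrm{PP} \ge 1$. The score equals 1 only when the model predicted every word with certainty, and it grows without bound as any word's probability approaches 0. The second form is a geometric mean of inverse probabilities. A score of 82, for example, means the model was on average as uncertain as if it were choosing among 82 equally likely words.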

AI-generated text conclusion: Not accurate. It marked the text as “likely human-generated” and gave it a perplexity score of 82.

Human-generated text conclusion: Accurate. It identified the text as “likely human-generated” and gave it a perplexity score of 102.

Price: Free 2,000 tokens (about 2,500 words); two cents per 100 tokens

What’s ahead

As AI content-generation tools multiply, more solutions for detecting their output will follow. But the caveat remains: no tool can be 100% accurate.

You must assess if the detection tools are necessary for your content marketing. Will you be like Google, which says quality, accuracy, and relevance of the content matter more than AI’s role in the creation? Or will you decide AI’s involvement matters more to your objectives?

All tools mentioned in the article are identified by the author. If you have a tool to suggest, please feel free to add it in the comments.

Cover image by Joseph Kalinowski/Content Marketing Institute
